MCP in the AI Era: A Developer's Guide

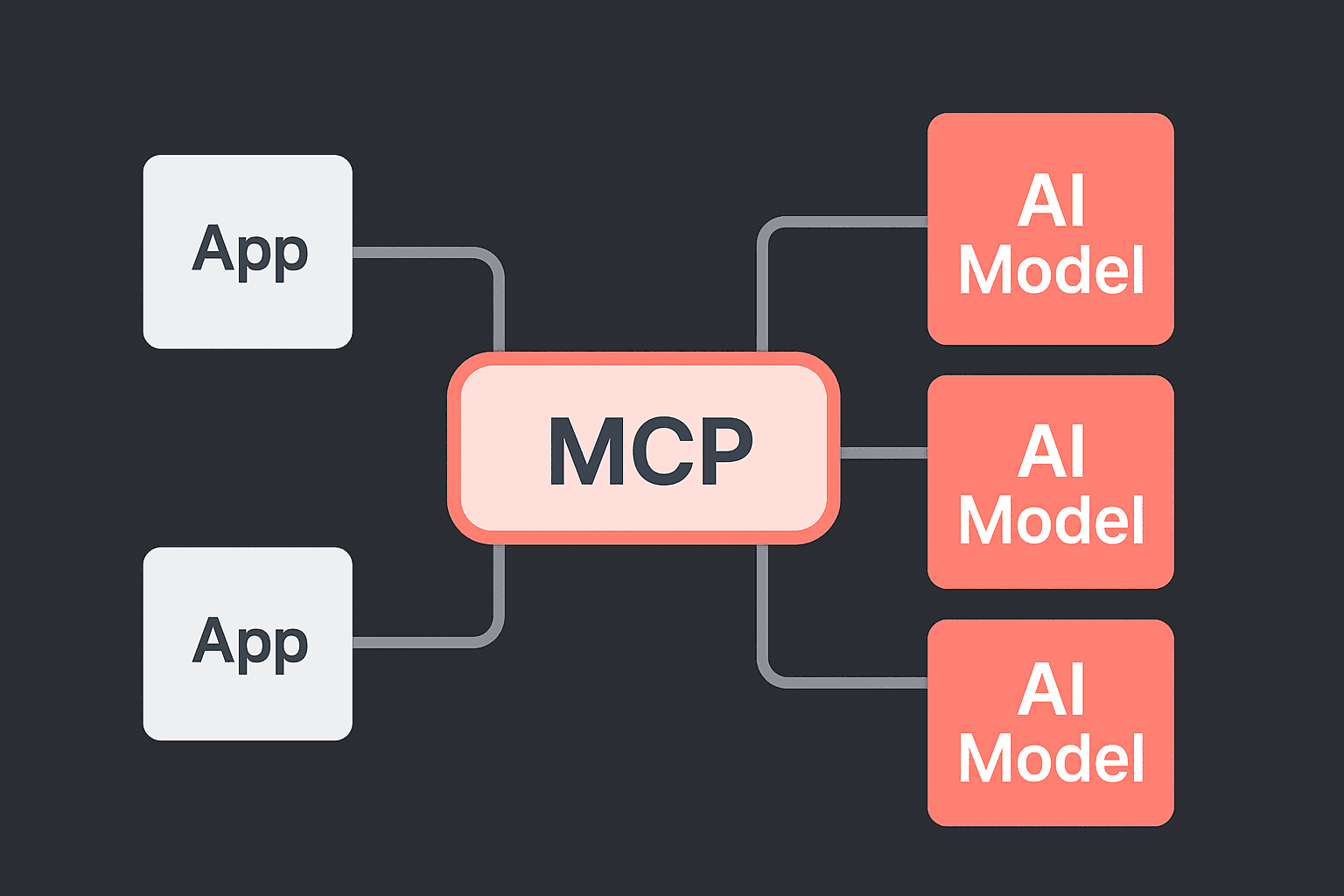

As artificial intelligence becomes increasingly central to software development, the need for standardized ways to interact with AI models keeps growing. Enter the Model Context Protocol (MCP): an open protocol that aims to standardize how we integrate AI models into our applications.

What is MCP? 🤔

The Model Context Protocol is an open standard that defines how applications communicate with AI models. Think of it as the "HTTP of AI": a standardized way for your application to send requests to and receive responses from any AI model that supports the protocol, regardless of where it is hosted or who created it.

Why MCP Matters in the AI Era 🌟

Several key factors make MCP particularly relevant today:

- Model Agnostic: Switch between different AI models without changing your application code

- Standardized Communication: Consistent way to handle prompts, context, and responses

- Vendor Independence: Freedom to use any AI provider that supports the protocol

- Better Resource Management: Efficient handling of context windows and token usage
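To make the model-agnostic idea concrete, here is a minimal sketch of the adapter pattern behind it: application code calls one interface, and a router picks a provider by model name. All names here (`ModelProvider`, `ModelRouter`, `EchoProvider`) are hypothetical and not part of any MCP SDK; `EchoProvider` stands in for a real vendor adapter so the example runs offline.

```typescript
// Hypothetical request/response types; not taken from any published MCP SDK.
interface CompletionRequest {
  model: string;
  prompt: string;
  maxTokens?: number;
}

interface CompletionResult {
  model: string;
  text: string;
}

// Each provider adapter translates the shared request into its vendor's API.
interface ModelProvider {
  supports(model: string): boolean;
  complete(req: CompletionRequest): Promise<CompletionResult>;
}

// Stand-in adapter that echoes the prompt instead of calling a real service.
class EchoProvider implements ModelProvider {
  private readonly prefix: string;

  constructor(prefix: string) {
    this.prefix = prefix;
  }

  supports(model: string): boolean {
    return model.startsWith(this.prefix);
  }

  async complete(req: CompletionRequest): Promise<CompletionResult> {
    return { model: req.model, text: `[${this.prefix}] ${req.prompt}` };
  }
}

// Application code talks only to the router, never to a specific vendor.
class ModelRouter {
  private readonly providers: ModelProvider[];

  constructor(providers: ModelProvider[]) {
    this.providers = providers;
  }

  async complete(req: CompletionRequest): Promise<CompletionResult> {
    const provider = this.providers.find((p) => p.supports(req.model));
    if (!provider) {
      throw new Error(`No provider registered for model "${req.model}"`);
    }
    return provider.complete(req);
  }
}
```

With this structure, moving from `gpt-4` to `claude-3` means changing only the model string passed to `router.complete()`.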

Getting Started with MCP 🚀

Let's look at a basic example of using MCP in a Node.js application:

```javascript
import { MCPClient } from '@mcp/client'

// Top-level await requires an ES module (e.g. a .mjs file or "type": "module")
const client = new MCPClient({
  endpoint: 'https://your-mcp-server.com',
  apiKey: process.env.MCP_API_KEY // keep credentials out of source code
})

// Basic completion request
const completion = await client.complete({
  prompt: 'Explain quantum computing in simple terms',
  model: 'gpt-4',
  maxTokens: 100
})

console.log(completion.text)
```
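Because application code only depends on the shape of `complete()`, you can swap in a fake client for unit tests. The sketch below assumes a hypothetical `CompletionClient` interface that mirrors the call above (a request with `prompt`, `model` and `maxTokens`, and a result with `text`); it is not the `@mcp/client` type definition.

```typescript
// Hypothetical interface mirroring the shape of client.complete() above.
interface CompleteOptions {
  prompt: string;
  model: string;
  maxTokens?: number;
}

interface Completion {
  text: string;
}

interface CompletionClient {
  complete(options: CompleteOptions): Promise<Completion>;
}

// Application logic depends only on the interface, not on a concrete client.
async function explain(client: CompletionClient, topic: string): Promise<string> {
  const completion = await client.complete({
    prompt: `Explain ${topic} in simple terms`,
    model: "gpt-4",
    maxTokens: 100,
  });
  return completion.text.trim();
}

// In-memory fake that records every request and returns a canned answer.
class FakeClient implements CompletionClient {
  readonly calls: CompleteOptions[] = [];

  async complete(options: CompleteOptions): Promise<Completion> {
    this.calls.push(options);
    return { text: "  A canned answer.  " };
  }
}
```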

Key MCP Concepts 📚

1. Context Management

MCP lets you attach context, such as a system prompt, to a conversation so it persists across messages:

```javascript
const conversation = client.createConversation()

// Add context that persists across messages
conversation.addContext({
  role: 'system',
  content: 'You are a helpful programming assistant'
})

// Send messages and get responses
const response = await conversation.sendMessage(
  'How do I implement a binary search?'
)
```
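Conceptually, a conversation like this keeps the persistent context separate from the growing message history and sends both on every turn. A minimal sketch, assuming a hypothetical `Conversation` class whose `reply` callback stands in for the actual model call:

```typescript
type Role = "system" | "user" | "assistant";

interface Message {
  role: Role;
  content: string;
}

// Hypothetical conversation: context is stored separately so it is sent with
// every request, while user/assistant turns accumulate in order.
class Conversation {
  private readonly context: Message[] = [];
  private readonly history: Message[] = [];
  private readonly reply: (messages: Message[]) => Promise<string>;

  constructor(reply: (messages: Message[]) => Promise<string>) {
    this.reply = reply;
  }

  addContext(message: Message): void {
    this.context.push(message);
  }

  // Full message list: persistent context first, then the turn history.
  messages(): Message[] {
    return [...this.context, ...this.history];
  }

  async sendMessage(content: string): Promise<string> {
    this.history.push({ role: "user", content });
    const answer = await this.reply(this.messages());
    this.history.push({ role: "assistant", content: answer });
    return answer;
  }
}
```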

2. Model Switching

One of MCP's main selling points is easy model switching: only the model parameter changes.

```javascript
// Using GPT-4
await client.complete({
  model: 'gpt-4',
  prompt: 'Complex task...'
})

// Switch to Claude
await client.complete({
  model: 'claude-3',
  prompt: 'Same task...'
})
```
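Because only the model name changes, the same call can also fall back to another model when the first one fails. A hedged sketch, where `CompleteFn` is a hypothetical stand-in for whatever function sends the request:

```typescript
interface CompletionRequest {
  model: string;
  prompt: string;
}

// Hypothetical signature for any function that sends a completion request.
type CompleteFn = (req: CompletionRequest) => Promise<string>;

// Tries each model in order and returns the first successful response.
async function completeWithFallback(
  complete: CompleteFn,
  models: string[],
  prompt: string,
): Promise<{ model: string; text: string }> {
  const errors: string[] = [];
  for (const model of models) {
    try {
      const text = await complete({ model, prompt });
      return { model, text };
    } catch (err) {
      // Record the failure and move on to the next model in the list.
      errors.push(`${model}: ${err instanceof Error ? err.message : String(err)}`);
    }
  }
  throw new Error(`All models failed -> ${errors.join("; ")}`);
}
```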

3. Stream Management

For long outputs, you can stream the response and process it chunk by chunk:

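```javascript
const stream = await client.completeStream({
  prompt: 'Write a long story...',
  model: 'gpt-4'
})

// Write each chunk as it arrives, without adding a newline after every chunk
for await (const chunk of stream) {
  process.stdout.write(chunk.text)
}
```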
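Streams like this are typically consumed with `for await`, forwarding each chunk as it arrives and assembling the full text at the end. The sketch assumes each chunk has a `text` field, as in the example above; `fakeStream` is a hypothetical stand-in that splits a string into chunks so the code runs without a server.

```typescript
interface StreamChunk {
  text: string;
}

// Stand-in for a streaming response: yields the text a few characters at a time.
async function* fakeStream(text: string, size = 5): AsyncGenerator<StreamChunk> {
  for (let i = 0; i < text.length; i += size) {
    yield { text: text.slice(i, i + size) };
  }
}

// Consumes any async iterable of chunks, forwarding each piece as it arrives
// and returning the assembled text once the stream ends.
async function collectStream(
  stream: AsyncIterable<StreamChunk>,
  onChunk: (text: string) => void = () => {},
): Promise<string> {
  const parts: string[] = [];
  for await (const chunk of stream) {
    onChunk(chunk.text);
    parts.push(chunk.text);
  }
  return parts.join("");
}
```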

Best Practices 💡

- Always Handle Rate Limits

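```javascript
try {
  const response = await client.complete({
    prompt: 'My prompt',
    retry: true,
    maxRetries: 3
  })
  console.log(response.text)
} catch (error) {
  if (error.code === 'rate_limit_exceeded') {
    // Handle rate limiting, e.g. back off, queue the request, or inform the user
  } else {
    throw error // don't silently swallow unrelated errors
  }
}
```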
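If your client does not retry for you, a generic exponential-backoff wrapper achieves a similar effect. A minimal sketch, assuming rate-limit errors expose `code === 'rate_limit_exceeded'` as in the snippet above; `withBackoff` and its parameters are hypothetical names:

```typescript
// Assumed error shape, matching the error.code check in the example above.
interface CodedError {
  code?: string;
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Retries fn on rate-limit errors, doubling the delay each attempt (plus jitter).
// Any other error, or running out of retries, is rethrown to the caller.
async function withBackoff<T>(
  fn: () => Promise<T>,
  maxRetries = 3,
  baseDelayMs = 500,
): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (error) {
      const isRateLimit = (error as CodedError | null)?.code === "rate_limit_exceeded";
      if (!isRateLimit || attempt >= maxRetries) throw error;
      const delay = baseDelayMs * 2 ** attempt + Math.random() * baseDelayMs;
      await sleep(delay);
    }
  }
}
```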

- Manage Context Windows Efficiently

```javascript
const conversation = client.createConversation({
  maxTokens: 4000,
  pruneStrategy: 'sliding_window'
})
```
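A sliding-window strategy generally keeps the system prompt and drops the oldest turns until the conversation fits the token budget. The sketch below is one way to implement that idea, not the pruning logic of any specific library; it assumes a rough heuristic of about four characters per token instead of a real tokenizer, and `pruneSlidingWindow` is a hypothetical helper.

```typescript
interface Message {
  role: "system" | "user" | "assistant";
  content: string;
}

// Rough heuristic (assumption): about 4 characters per token for English text.
// A real implementation would use the model's own tokenizer.
const estimateTokens = (m: Message): number => Math.ceil(m.content.length / 4);

// Sliding-window pruning: always keep system messages, then keep the most
// recent non-system messages that still fit within maxTokens.
function pruneSlidingWindow(messages: Message[], maxTokens: number): Message[] {
  const system = messages.filter((m) => m.role === "system");
  let budget = maxTokens - system.reduce((sum, m) => sum + estimateTokens(m), 0);

  const kept: Message[] = [];
  for (let i = messages.length - 1; i >= 0; i--) {
    const m = messages[i];
    if (m.role === "system") continue;
    const cost = estimateTokens(m);
    if (cost > budget) break; // this message and everything older are dropped
    kept.push(m);
    budget -= cost;
  }
  return [...system, ...kept.reverse()];
}
```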

- Use Type-Safe Responses

```typescript
interface CustomResponse {
  answer: string
  confidence: number
}

const response = await client.complete<CustomResponse>({
  prompt: 'What is 2+2?',
  responseFormat: {
    schema: {
      type: 'object',
      properties: {
        answer: { type: 'string' },
        confidence: { type: 'number' }
      },
      // Without "required", a response missing either field would still validate
      required: ['answer', 'confidence']
    }
  }
})
```
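A TypeScript generic such as `complete<CustomResponse>` only tells the compiler what to expect; it does not check the data at runtime. A small type guard closes that gap. This sketch assumes the raw model output arrives as a JSON string; `parseCustomResponse` is a hypothetical helper.

```typescript
interface CustomResponse {
  answer: string;
  confidence: number;
}

// Runtime check that an unknown value has the CustomResponse shape.
function isCustomResponse(value: unknown): value is CustomResponse {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return typeof v.answer === "string" && typeof v.confidence === "number";
}

// Parses raw model output and fails loudly if it does not match the schema.
function parseCustomResponse(raw: string): CustomResponse {
  const data: unknown = JSON.parse(raw);
  if (!isCustomResponse(data)) {
    throw new Error(`Response does not match CustomResponse: ${raw}`);
  }
  return data;
}
```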

Setting Up Your Own MCP Server 🛠️

Running your own MCP server gives you control over which models are exposed and how requests are rate-limited:

- Install the Server

```shell
npm install @mcp/server
```

- Basic Configuration

```javascript
import { MCPServer } from '@mcp/server'

const server = new MCPServer({
  port: 3000,
  models: ['gpt-4', 'claude-3'],
  rateLimit: {
    windowMs: 15 * 60 * 1000, // 15 minutes
    max: 100
  }
})

server.start()
```
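The `rateLimit` options above use the same names as the common fixed-window convention (express-rate-limit uses `windowMs` and `max`), which would mean at most 100 requests per client every 15 minutes. Assuming that reading, a limiter of this kind can be sketched as follows; `FixedWindowLimiter` is a hypothetical class keyed by a client identifier such as an API key or IP address.

```typescript
// Fixed-window limiter: each client may make `max` requests per `windowMs`;
// the count resets when a new window starts.
class FixedWindowLimiter {
  private readonly windowMs: number;
  private readonly max: number;
  private readonly windows = new Map<string, { start: number; count: number }>();

  constructor(windowMs: number, max: number) {
    this.windowMs = windowMs;
    this.max = max;
  }

  // Returns true if the request is allowed; `now` is injectable for testing.
  allow(clientId: string, now: number = Date.now()): boolean {
    const w = this.windows.get(clientId);
    if (!w || now - w.start >= this.windowMs) {
      // First request, or the previous window has expired: start a new one.
      this.windows.set(clientId, { start: now, count: 1 });
      return true;
    }
    if (w.count >= this.max) return false;
    w.count++;
    return true;
  }
}
```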

The Future of MCP 🔮

The MCP ecosystem is growing rapidly, with developments in:

- Multi-model orchestration

- Advanced context management

- Improved type safety

- Enhanced security features

Conclusion

MCP is gaining traction as a standard for AI model integration, offering a clean, consistent way to work with AI models. Whether you're building a simple chatbot or a complex AI-powered application, understanding and implementing MCP will put you in a strong position in the AI era.